Introduction

You have a hot ASP.NET + SQL Server product, growing by a thousand users per day, and you have hit the limit of your own garage hosting capability. Now that you have enough VC money in your pocket, you are planning to move to a real hosting facility, maybe colocation or managed hosting. So you are wondering how to design a physical architecture that ensures the performance, scalability, security and availability of your product. How can you achieve four-nine (99.99%) availability? How do you securely let your development team connect to production servers? How do you choose the right hardware for web and database servers? Should you use a Storage Area Network (SAN) or just local disks on RAID? How do you securely connect your office computers to the production environment?

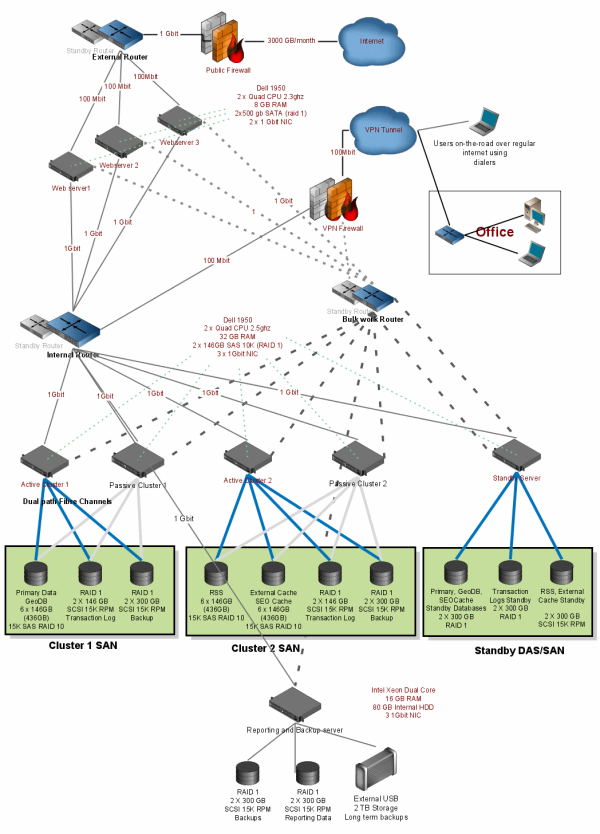

Here I will answer all these questions. Let me first show you a diagram I made for Pageflakes, with which we achieved four-nine availability. Since Pageflakes is a Level 3 SaaS, it’s absolutely important that we build a high-performance, highly available product that can be used from anywhere in the world 24/7, where end users get quick access to their content with complete personalization and customization and can share it with others and with the world. So you can take this production architecture as a very good candidate for a Level 3 SaaS:


First, a quick overview:

- The web server layer contains three web servers in load-balanced mode. Each web server hosts exactly the same copy of the code and other artifacts of our ASP.NET web application, and IIS 6 is configured exactly the same way on each of them. The web app uses SQL Server-based Session and Forms Authentication.

- The web servers talk to SQL Server clusters. There are two active/passive clusters. One cluster hosts the main database, which has the users, pages and widgets; the other cluster hosts the other databases, such as reports, logs, Geolocation, Cache, RSS etc. We separated the databases based on their I/O load so that each cluster carries more or less the same I/O load.

- The database servers are connected to an EMC SAN where we have a couple of SAN volumes. In each cluster, we have a SCSI RAID 10 volume to store MDFs, a SCSI RAID 1 volume to store LDFs and a SATA RAID 1 volume to store the logs.

- There’s one standby cluster that has a SQL Server transaction-log-shipped mirror of all the databases. This provides logical redundancy: if we screw up the database schema during a release, we have a standby database to go back to.

I will explain each and every layer and hardware component and the decisions involved in choosing them.

The Web tier

Public Firewall

Do: Use a firewall that connects to the internet (your hosting provider’s public router or similar) on one side and to a router or switch named the External Router on the other. Let’s call this firewall the Public Firewall (PF).

Why: Without a PF, your servers are nakedly exposed to the internet. You might argue that Windows Firewall is sufficient. It’s not. Windows Firewall is not built to handle DDoS attacks, TCP floods and malformed packets as efficiently as a hardware firewall, which has a dedicated processor that handles all the filtering. If too many malformed requests come in, your web servers’ CPUs will be too busy saving you from those attacks rather than doing their real job, like running your ASP.NET code.

Do: Open only ports 80 and 443 on this firewall. If you aren’t using SSL, open only port 80. Don’t keep the Remote Desktop port open on the public firewall.

Why: You should not expose your servers to the internet for Remote Desktop. If someone captures your Remote Desktop session while you are administering your server from Starbucks over insecure Wi-Fi, you are doomed. Remote Desktop access should be available only over the VPN.

Do: Never open the FTP port.

Why: FTP is insecure; it transmits the username and password in plain text. Anyone with basic hacking knowledge can capture the username and password when you log into your production server via FTP. If you must use FTP, use a setup that requires no username and password and only lets you write files to a specific folder, never read anything or even list the files on the FTP server. This way, you don’t expose server credentials to anyone and you can at least transmit files to the web servers when needed. Also ensure that a disk quota is enforced on the volume you upload files to and that bandwidth is strictly limited. Otherwise someone might use the full bandwidth to upload giant files and make you run out of disk space.

Do: Never open file sharing ports

Why: The file sharing service ports are among the most vulnerable. They expose critical Windows services that can be compromised to run arbitrary code on your server and destroy it.
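
The public firewall rules above boil down to default deny with two exceptions. A minimal Python sketch of that policy as data, assuming the well-known default port numbers (21 for FTP control, 139 and 445 for Windows file sharing, 3389 for Remote Desktop). It models the rule set only and is not the configuration syntax of any firewall product:

```python
# Model of the public firewall's inbound policy: default deny, with only
# HTTP and HTTPS allowed. Port numbers are the standard defaults (assumption:
# no service has been moved to a non-standard port).
ALLOWED_INBOUND = {80: "HTTP", 443: "HTTPS"}

# Ports that must never be reachable from the internet. Default deny already
# blocks them; they are listed here to document intent.
NEVER_OPEN = {
    21: "FTP control",
    139: "NetBIOS session (file sharing)",
    445: "SMB (file sharing)",
    3389: "Remote Desktop",
}


def allow_inbound(port: int, use_ssl: bool = True) -> bool:
    """Return True if the public firewall should accept a new inbound connection on `port`."""
    if port in NEVER_OPEN:
        return False
    if port == 443 and not use_ssl:
        return False  # without SSL, only port 80 stays open
    return port in ALLOWED_INBOUND


for port in (80, 443, 21, 445, 3389):
    print(port, allow_inbound(port))
```

Everything not explicitly allowed is refused, so Remote Desktop and file transfers have to come in over the VPN instead.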

External Switch with Load Balancer

Do: Use an external switch with load balancing capability that evenly distributes traffic across your web servers. This means every web server you put behind the load balancer gets an even share of the total traffic. Each of your web servers has a NIC that connects to this switch; this is your public connection. If your traffic is under 10 million hits per day, a 100 Mbit switch and NICs of the same speed are sufficient. You can also choose a firewall that has load balancer capability and enough ports to connect all your web servers.

Why: The separate NIC carries only website traffic over a dedicated network connection so that it is not hampered by any other local traffic. The switch does the load balancing for you. Do not use Windows Network Load Balancing. Again, the idea is to keep your web servers to their job only: execute ASP.NET code, call the database, return HTML pages and static files, and that’s it. Leave everything else to other components. Hardware load balancers are very efficient and much more fault tolerant. If you go for software load balancing, you will periodically have to restart servers to solve mysterious problems like a server not teaming up with the others, or a server getting too much traffic, and so on.

Do: Keep the load balancer dead simple: do only basic round-robin traffic distribution, with no fancy affinity and no request header parsing.

Why: We tried using affinity and URL filters to serve specific URLs from specific servers. It was a nightmare. We spent countless hours on the phone with Cisco support engineers troubleshooting mysterious behavior. They delivered endless patches, each of which fixed one bug but introduced another. The affinity feature behaved mysteriously; for example, a server taken out of the load balancer still received traffic. We also tried URL filtering, where a specific URL pattern like www.pageflakes.com/company gets served from a specific web server. But this required the load balancer to open up every request, parse it, find the URL, match it against a long list of URL patterns and then decide where to send the traffic. This was a bad decision. It caused the load balancers to choke under high traffic, overflow their internal error buffers, frequently reset themselves to clear the jam, and so on. So we learned a very valuable lesson that a Microsoft architect had once told us, but we had been too smart to listen: “Keep your load balancer dead simple: use basic round robin.”
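
Basic round robin needs no knowledge of the request at all, which is exactly why it is robust. A minimal Python sketch of the idea, with hypothetical server names (a hardware load balancer implements this in firmware, not with code like this): servers are handed out in strict rotation, and a server taken out of rotation is simply skipped.

```python
class RoundRobinBalancer:
    """Plain round robin: no affinity, no URL or header inspection."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.in_rotation = set(self.servers)
        self._next = 0  # index of the server to try next

    def remove(self, server):
        """Take a server out of rotation, e.g. for patching."""
        self.in_rotation.discard(server)

    def add(self, server):
        """Put a known server back into rotation."""
        if server in self.servers:
            self.in_rotation.add(server)

    def pick(self):
        """Return the next in-rotation server in strict rotation order."""
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.in_rotation:
                return server
        raise RuntimeError("no servers in rotation")


lb = RoundRobinBalancer(["web1", "web2", "web3"])
print([lb.pick() for _ in range(6)])
lb.remove("web2")
print([lb.pick() for _ in range(4)])
```

Note that `pick()` never looks at URLs, cookies or headers, so there is nothing to parse and nothing to choke on under load.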

Do: Have a standby device for each router and firewall.

Why: When any of these devices fails, the standby one kicks in. If you don’t have a standby public firewall, then when your firewall goes down, your website is completely down until you get a replacement. Similarly, when the External Router/Switch is down, your website is down as well. So it’s wise to have a standby firewall and switch/router available. It’s hard to pay for a device that’s idle 99% of the time, but when it saves the day and keeps your site from going down for hours, it feels good to have been wise. Moreover, sometimes you will have to upgrade the firmware of a firewall or router, which means restarting the device, and while it restarts no traffic can come through it. In such a case, you bring the standby one online, patch and restart the primary, bring the primary back online, and then patch and restart the standby.
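
The rolling firmware upgrade above is a fixed sequence in which the device being restarted is never the one carrying traffic. A small Python model of that sequence, with hypothetical device names and firmware version numbers; it refuses to restart the active device, so any misordering surfaces as an error:

```python
class DevicePair:
    """Hypothetical active/standby pair of firewalls or routers."""

    def __init__(self, primary: str, standby: str, firmware: int = 1):
        self.active = primary
        self.firmware = {primary: firmware, standby: firmware}

    def make_active(self, name: str) -> None:
        """Move traffic to `name`; the other device becomes the idle standby."""
        if name not in self.firmware:
            raise KeyError(name)
        self.active = name

    def patch_and_restart(self, name: str, version: int) -> None:
        """Upgrade firmware on an idle device; restarting the active one would drop traffic."""
        if name == self.active:
            raise RuntimeError(f"{name} is carrying traffic; fail over first")
        self.firmware[name] = version


def rolling_upgrade(pair: DevicePair, primary: str, standby: str, version: int) -> None:
    """Standby takes traffic, patch primary, primary takes traffic back, patch standby."""
    pair.make_active(standby)
    pair.patch_and_restart(primary, version)
    pair.make_active(primary)
    pair.patch_and_restart(standby, version)
```

For example, `rolling_upgrade(DevicePair("fw-a", "fw-b"), "fw-a", "fw-b", 2)` leaves both devices on version 2 with `fw-a` carrying traffic again, and at no point was the active device restarted.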

Do: Give each of your web servers another NIC that connects it to the private network where your databases are. In the diagram, this is the Internal Router or Internal Switch.

Why: Do not use the same NIC to connect to both the private and the public network. You don’t want public network traffic to congest your local network traffic. Generally, the nature of the traffic on the public and private networks is completely opposite: the public network sees less frequent, long bursts of traffic (delivering large HTML responses to browsers), whereas the private network sees high-frequency, short bursts (making short and frequent database calls).

Do: Get moderately powerful web servers with enough storage. We chose Dell PowerEdge 1950 servers as web servers, with 2 x quad-core CPUs, 8 GB RAM and 3 x NICs. There are four 500 GB SATA drives configured as two RAID 1 volumes.

Why: The C: volume is on one RAID 1 and holds the OS; the D: volume is on the other RAID 1 and holds the web application code and the gigantic IIS logs. You might wonder why we need 500 GB on the OS partition. We use MSMQ, and some very large queues sit on the C: drive. You might also wonder why we need so much space on the D: drive. When you turn on cookie logging in IIS, ASP.NET’s gigantic Forms Authentication cookie is sent with every request and gets logged, so the IIS logs get really big. You might be producing 3 GB of IIS logs per server on a moderate-traffic website. It’s better to keep enough space on the web server to store several weeks’ worth of IIS logs in case the internal systems that move those logs elsewhere for reporting break.
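
To size the D: volume, it helps to turn “several weeks” into numbers. A minimal Python sketch, assuming the 3 GB figure is per server per day (the text does not state the period) and a hypothetical 50 GB reserved for the application and other files on the 500 GB RAID 1 volume:

```python
def log_retention_days(volume_gb: float, reserved_gb: float, logs_gb_per_day: float) -> float:
    """Days of IIS logs that fit on a volume after reserving space for other files."""
    if logs_gb_per_day <= 0:
        raise ValueError("logs_gb_per_day must be positive")
    return max(volume_gb - reserved_gb, 0) / logs_gb_per_day


# Assumed inputs: 500 GB usable (two 500 GB disks in RAID 1), 50 GB reserved,
# 3 GB of IIS logs per day.
days = log_retention_days(500, 50, 3)
print(f"{days:.0f} days, about {days / 7:.0f} weeks of logs")
```

Under these assumptions the volume holds 150 days of logs, which is the grace period you have if the log-moving job breaks.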

Do: Run the 64-bit version of Windows.

Why: Without a 64-bit OS, you cannot fully utilize 4 GB of RAM or more. In fact, on 32-bit Windows you can use only around 3.2 GB even if 4 GB of RAM is installed. There are addressing issues on 32-bit systems, especially with PCI controllers, which use some of the address space above 3.2 GB to map devices, so the RAM available to Windows is below 3.2 GB. A 64-bit OS, on the other hand, lets you use terabytes of RAM. The 64-bit version of the .NET Framework is stable enough to run heavy-duty applications. You might have had a bad experience running 64-bit Windows on your personal computers, but 64-bit servers are pretty solid nowadays. We never had a glitch in the last two years of operation.
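
The 32-bit ceiling is plain arithmetic: a 32-bit address can name 2^32 bytes, which is 4 GiB in total, and part of that range is taken by device mappings. A quick Python check. The 0.8 GiB device reservation is an assumption chosen to match the roughly 3.2 GB figure above; the real amount depends on the hardware:

```python
GIB = 2 ** 30  # bytes in one gibibyte


def address_space_gib(address_bits: int) -> float:
    """Total bytes addressable with `address_bits`, expressed in GiB."""
    return 2 ** address_bits / GIB


def usable_ram_32bit_gib(installed_gib: float, device_reserved_gib: float) -> float:
    """RAM a 32-bit OS can use: capped by the 4 GiB space minus device (e.g. PCI) mappings."""
    return min(installed_gib, address_space_gib(32) - device_reserved_gib)


print(address_space_gib(32))           # 4.0
print(usable_ram_32bit_gib(8.0, 0.8))  # about 3.2: installing more RAM does not help
```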

Do: Make the internal network fully 1 Gbit. All the network cables are Gbit cables, the router/switch is Gbit capable, and the NICs on the web servers that connect to the internal switch are Gbit as well. Spend as much as you can on this part of the configuration, because it needs to be the fastest.

Why: All communication from your web servers to the database servers goes through this network. Data will move over these connections very frequently, and in large volumes. Every page on your website might make 10 to 100 database calls, so there will be many short, frequent transmissions per page. You therefore need the entire private LAN to be very fast and low latency.
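
Latency matters more than raw bandwidth here because round trips add up per page. A back-of-the-envelope Python sketch, assuming the calls are made one after another and using hypothetical round-trip times; measure your own network rather than trusting these numbers:

```python
def db_wait_ms(calls_per_page: int, round_trip_ms: float) -> float:
    """Time a page spends on network round trips to the database, assuming sequential calls."""
    return calls_per_page * round_trip_ms


# 10 to 100 calls per page comes from the text; the round-trip times are assumptions.
for rtt_ms in (0.2, 1.0, 5.0):
    low, high = db_wait_ms(10, rtt_ms), db_wait_ms(100, rtt_ms)
    print(f"RTT {rtt_ms} ms -> {low:.0f} to {high:.0f} ms of network wait per page")
```

At 5 ms per round trip, a 100-call page spends half a second just waiting on the network, before SQL Server does any work at all.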

As for the bulk work router, I will get to it soon.

The VPN connectivity

The right side of the diagram shows the VPN connectivity. A VPN-capable firewall connects your office and roaming users to the internal router. From the internal router, users can get into the web servers and the database servers. This link sometimes needs to be fast, when you do a lot of file transfers between your office and the production environment. For example, if you pull down gigabytes of IIS logs every day from the production servers to the office, you need pretty good connectivity here. That said, the bottleneck will always be your office’s internet connection. If you have a T1 connection from your office to the production environment, then a 10 Mbit firewall, switch and cables are good enough, since a T1 is about 1.544 Mbps.
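
A quick Python calculation shows why the 10 Mbit gear is not the bottleneck behind a T1. It assumes 1 GB means 10^9 bytes and, by default, a perfectly efficient link; the `efficiency` parameter is a hypothetical knob for protocol and VPN overhead:

```python
T1_MBPS = 1.544  # T1 line rate, megabits per second


def transfer_hours(gigabytes: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Hours to move `gigabytes` (10**9 bytes) over a `link_mbps` link.

    `efficiency` below 1.0 models protocol and VPN overhead; the value you
    pass is an assumption, not a measured figure.
    """
    bits = gigabytes * 8 * 10**9
    seconds = bits / (link_mbps * 10**6 * efficiency)
    return seconds / 3600


print(f"1 GB over a T1: {transfer_hours(1, T1_MBPS):.2f} h")
print(f"1 GB over 10 Mbit: {transfer_hours(1, 10):.2f} h")
```

Even in this ideal case, a T1 needs well over an hour per gigabyte, so pulling down gigabytes of logs every day is limited by the office link, not by the firewall or switch.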

Why: Why do you need a VPN? Because it is absolutely important that you only allow connections to your production environment through an encrypted channel. A VPN is the only way to ensure that the connection from your computer to your production environment is secure and that no one can eavesdrop on or steal your session. Moreover, a VPN adds another layer of username+password security that must be passed to get into your production environment. This means that even if your server credentials are stolen (maybe you had them stored on a USB keychain), no one can connect to your production servers unless they are also connected to the VPN.

Do: Have a site-to-site VPN from your office network to your production environment. Use a router that has site-to-site VPN capability.

Why: Connecting to a VPN using VPN client software is a pain. Connections get dropped, and it’s slow because the encryption is done in software. Then there’s the Vista issue, where most VPN clients in the world do not work. So having a router that always maintains a secure connection to your production environment is a great time saver, especially when you transfer large files between your production environment and your office computers.

Do: Have a router at the office that separates developer and IT workstations from other workstations.

Why: If you have site-to-site VPN configured on a router, everyone connected to that router has access to the production environment. You don’t want your sales guys’ laptops to have access to the production environment, trust me. So you must put the highly trusted computers, like the IT and developer workstations, in one subnet and the other computers in the network in different subnets. Then you allow only the trusted subnet to access the production environment. You can set up rules in a good router that let only a specific subnet send traffic to the production server IPs. If you have staging servers inside your office, take the same precaution: only the trusted subnet should be able to see them. This extra precaution helps stop the spread of all the new viruses and worms that sales guys bring into the office on their laptops.
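
The office router rule boils down to a subnet membership check on both ends of the connection. A minimal Python sketch using the standard `ipaddress` module. The whole addressing plan is hypothetical (trusted workstations in 192.168.10.0/24, a sales subnet at 192.168.20.0/24, staging servers in 192.168.50.0/24 and production reached at 10.50.0.0/24 over the site-to-site VPN):

```python
import ipaddress

# Hypothetical addressing plan: replace with your own subnets.
TRUSTED = [ipaddress.ip_network("192.168.10.0/24")]   # IT and developer workstations
PROTECTED = [
    ipaddress.ip_network("10.50.0.0/24"),      # production servers, via site-to-site VPN
    ipaddress.ip_network("192.168.50.0/24"),   # staging servers inside the office
]


def in_any(addr, networks) -> bool:
    """True if `addr` falls inside any of `networks`."""
    return any(addr in net for net in networks)


def office_router_allows(src: str, dst: str) -> bool:
    """Only the trusted subnet may send traffic to production or staging addresses."""
    source, destination = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    if in_any(destination, PROTECTED):
        return in_any(source, TRUSTED)
    return True  # this rule does not restrict traffic to other destinations


print(office_router_allows("192.168.10.25", "10.50.0.11"))  # developer -> production
print(office_router_allows("192.168.20.7", "10.50.0.11"))   # sales laptop -> production
```

A real router expresses the same idea as an access rule on the VPN tunnel or the inter-subnet route; the point is that the check is on the source subnet, not on who happens to be logged in.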

The Database tier

The database servers sit behind the Internal Router/Switch. You might want to put the database servers on a very secure network by isolating the web servers in a DMZ (demilitarized zone). But we don’t use a DMZ firewall, since it becomes the biggest bottleneck for all traffic between the web and database servers. Instead, we use routers and allow only SQL Server’s port 1433 to pass through the router from a web server to any DB server. You can also use switches if you aren’t so fanatical about security. My logic is: protect each server by properly patching it and following security best practices that keep the servers themselves protected at all times. If someone compromises a web server, the connection strings are right there in a nice readable web.config file. Hackers can use them to connect to your database and do plenty of damage, like running DELETE FROM aspnet_users. So blocking ports and putting web servers in a DMZ does not really help much. Of course, this is my opinion, based on my limited knowledge of hackers’ abilities.
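
The internal router rule can be modeled as an ordered access list with an implicit deny at the end, which is how router ACLs usually behave. A minimal Python sketch with hypothetical subnets. It covers only connections initiated from web servers to database servers (return traffic, VPN access and the bulk network are left out), and it is not Cisco IOS or any other router syntax:

```python
import ipaddress
from typing import NamedTuple


class Rule(NamedTuple):
    src: ipaddress.IPv4Network
    dst: ipaddress.IPv4Network
    port: int
    action: str  # "permit" or "deny"


# Hypothetical subnets for the web and database servers on the internal network.
WEB_SERVERS = ipaddress.ip_network("10.1.1.0/28")
DB_SERVERS = ipaddress.ip_network("10.1.2.0/28")

# Web servers may open connections to SQL Server's default port 1433 and nothing else.
RULES = [Rule(WEB_SERVERS, DB_SERVERS, 1433, "permit")]


def evaluate(src: str, dst: str, port: int, rules=RULES) -> str:
    """First matching rule wins; anything unmatched is denied."""
    source, destination = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for rule in rules:
        if source in rule.src and destination in rule.dst and port == rule.port:
            return rule.action
    return "deny"


print(evaluate("10.1.1.5", "10.1.2.3", 1433))  # web -> DB on 1433
print(evaluate("10.1.1.5", "10.1.2.3", 445))   # web -> DB file sharing
```

As the text argues, this does not stop an attacker who already owns a web server: port 1433 is exactly what the stolen connection string needs.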

Do: Database servers need to be really beefy, with as much CPU power as possible, very fast disk controllers and a lot of RAM.

Why: SQL Server does both disk- and CPU-intensive work. So the more CPU you can throw at it, the faster queries execute. The disks also need to be really fast; you should definitely use 15K RPM SAS disks. For local disks, having a lot of memory on the disk controller makes a big difference. Also get as much L2 cache as possible, at least 4 MB.

Do: Store MDFs and LDFs on separate physical disks.

Why: Most reads come from the MDF and most writes go to the LDF. So you need to put them on separate physical disks so that reads and writes are performed in parallel without disturbing each other. Note, though, that transactions are first written to the LDF and then applied to the MDF, so the MDF gets quite a few writes too.

Do: Use RAID 10 for MDFs or other read-heavy workloads.

Why: RAID 10 is the fastest RAID configuration for reading. Since the data is distributed across many disks, reads are done in parallel: instead of reading bytes sequentially from one disk, the RAID controller reads bytes simultaneously from many disks. This gives faster read performance.

Do: Use RAID 1 for LDFs or other write-heavy workloads.

Why: Since RAID 1 has only two disks, there are fewer disks to write data to, which gives better write performance than higher RAID configurations. Of course, RAID 0 is the fastest, but it has no redundancy, so there’s no question of using it for a database, where data corruption causes severe problems.
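
The trade-offs between these RAID levels can be made concrete with a deliberately idealized model that ignores controller cache, stripe size and any SAN-specific behavior. A Python sketch using 146 GB disks as the example size:

```python
def raid_profile(level: int, disks: int, disk_gb: float) -> dict:
    """Idealized RAID model: usable capacity, how many disks can serve reads in
    parallel, and how many disk failures are survived in the worst case."""
    if level == 0:
        if disks < 2:
            raise ValueError("RAID 0 needs at least 2 disks")
        return {"usable_gb": disks * disk_gb, "read_disks": disks, "failures_survived": 0}
    if level == 1:
        if disks != 2:
            raise ValueError("RAID 1 here means a two-disk mirror")
        return {"usable_gb": disk_gb, "read_disks": 2, "failures_survived": 1}
    if level == 10:
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
        # Striped mirrors: half the disks hold copies. Any single failure is survived;
        # more are survived only if they hit different mirror pairs.
        return {"usable_gb": disks // 2 * disk_gb, "read_disks": disks, "failures_survived": 1}
    raise ValueError(f"RAID {level} is not modeled here")


for level, disks in ((1, 2), (10, 8), (0, 4)):
    print(f"RAID {level} x{disks}:", raid_profile(level, disks, 146))
```

The model shows why RAID 10 suits MDFs (many disks serving reads in parallel) and why RAID 0 is ruled out (no failure survived).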

Do: Use SAS drives for the OS partition.

Why: It sounds expensive, but SQL Server does a lot of paging when sufficient RAM is not available. Since page files reside on local disks, those disks need to be as fast as possible; otherwise slow disks drag down paging performance.

Do: Always perform backup and log shipping to different physical disks, preferably on RAID 1.

Why: Backup performs a lot of disk writes, and so does transaction log shipping, while the reads always come from the MDF. So it’s important that the backup writes go to different physical disk(s). Otherwise, during backup, the continuous reads along with the regular reads/writes on the MDF will choke SQL Server. We had this problem: whenever we did a full backup, at the very last moment, when SQL Server locks the entire database to write the transactions that happened since the backup started, the database stayed locked long enough for queries to time out and the website to throw SQL timeout exceptions. The only way to shorten this last-minute locking was to make sure the reads from the MDF and the writes to the backup both got done really fast. So we spread the MDF across more disks using a RAID 10 volume, and we put the backup writes on a separate RAID 1 volume with fewer disks.

Do: Use Windows Clustering for high availability

Why: Windows Clustering is a high-availability solution usually configured with one active server and one passive server. Both servers have SQL Server installed, but at any time only one is active and connected to the shared storage, the SAN. Whenever the active server fails, the cluster service automatically brings the passive server online and connects the SAN volumes to it. This way you get minimal downtime, since the overhead of switching to the other server is just the time to start SQL Server on the passive server and recover the database. Usually the passive server comes online with an 80 GB database within 5 minutes. During failover, it just has to roll back the uncommitted transactions in the LDF.
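
A conceptual Python model of the failover just described: the active node must keep answering heartbeats, and after a few consecutive misses the passive node takes ownership of the shared SAN volumes. The heartbeat threshold and node names are hypothetical, and this is not the Windows Clustering API; real clusters also use a quorum resource so that both nodes never claim the disks at once.

```python
class TwoNodeCluster:
    """Active/passive pair sharing SAN volumes; only the active node owns them."""

    def __init__(self, active: str, passive: str, max_missed: int = 3):
        self.active, self.passive = active, passive
        self.max_missed = max_missed  # hypothetical threshold
        self.missed = 0
        self.disk_owner = active

    def heartbeat(self, active_answered: bool) -> None:
        """Record one heartbeat interval; fail over after too many misses in a row."""
        if active_answered:
            self.missed = 0
            return
        self.missed += 1
        if self.missed >= self.max_missed:
            self.fail_over()

    def fail_over(self) -> None:
        # The passive node takes the shared volumes, then starts SQL Server,
        # which recovers the databases from the same MDF/LDF files.
        self.active, self.passive = self.passive, self.active
        self.disk_owner = self.active
        self.missed = 0
```

Because both nodes use the same files on the SAN, nothing is copied during failover; the downtime is the detection time plus SQL Server startup and recovery.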

But the catch with Windows Clustering is that you must have a SAN; local disks won’t do. This takes you to the next recommendation:

Do: Use Storage Area Network (SAN)

Why: Performance, scalability, reliability and extensibility. A SAN is the ultimate storage solution: a giant box running hundreds of disks, with many disk controllers, many data channels and lots of cache memory. You have ultimate flexibility in RAID configuration: adding as many disks as you like to a RAID, sharing disks across multiple RAID configurations and so on. A SAN has faster disk controllers, more parallel processing power and more disk cache memory than the regular controllers you put inside a server, so you get better disk throughput with a SAN than with local disks. You can grow and shrink volumes on the fly while your app is running and using them. A SAN can automatically mirror disks and, when a disk fails, automatically bring up the mirror disks and reconfigure the RAID.

But SANs raise some serious concerns as well. Usually you don’t go for a dedicated SAN because it’s just too expensive; instead you get some disks from an existing SAN at your hosting provider. Hosting providers serve many customers from the same SAN, so they may give you space on disks that are also running another customer’s database. For example, say the SAN has 146 GB SAS disks and you request 100 GB of SAS storage. They allocate you 100 GB from a disk, and the remaining 46 GB goes to another customer. Now you and the other customer are reading from and writing to the same disk on the SAN. What if the other customer issues a full backup at 8 AM? The disk is pounded by enormous writes while you are struggling for every bit of read throughput you can get out of that disk. What if the other customer has a stupid DBA who did not optimize the database well enough and thus produces too much disk I/O? You are then struggling for your share of disk I/O. The disk controllers try their best to throttle reads and writes and give everyone using the same disk a fair share, but things aren’t always so fair. You suffer for others’ stupidity. One way to avoid this problem is to buy SAN space in units of whole physical disks: if the SAN is made of 146 GB SAS disks, buy space in multiples of 146 GB, and ask your hosting provider to give you full disks that aren’t shared with others.
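
Buying in whole disks is simple rounding up. A Python sketch using the 146 GB disk size from the example. Note that a RAID volume multiplies the count by its member disks (a RAID 1 mirror of 146 GB needs two whole disks), which this helper leaves to you:

```python
import math


def whole_disk_allocation(requested_gb: float, disk_gb: int = 146) -> tuple:
    """Round a storage request up to whole SAN disks so no disk is shared with another customer.

    Returns (number_of_disks, allocated_gb).
    """
    disks = math.ceil(requested_gb / disk_gb)
    return disks, disks * disk_gb


print(whole_disk_allocation(100))  # (1, 146)
print(whole_disk_allocation(300))  # (3, 438)
```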

Do: RAM, lots of RAM

Why: SQL Server tries its best to keep indexes in RAM. The more it can hold in RAM, the faster queries execute. Going to disk to fetch a row is more than a thousand times slower than finding that row in RAM. So if you see SQL Server producing too many reads from your MDFs, add more RAM. If you see Windows paging, add more RAM. Keep adding RAM until disk reads stabilize at some low value. However, this is a very unscientific approach. You should measure SQL Server’s “Buffer cache hit ratio” performance counter and the “Pages/sec” counter to make a better decision. But as a rule of thumb, have 2 GB + 60% of the size of your MDF in RAM: if your MDF is 50 GB, have 32 GB of RAM. The reasoning is that you don’t need all of your MDF in RAM, because not all rows get fetched frequently. Generally 30% to 40% of the data is logs, audits or other data that gets accessed infrequently. So you should figure out how much of your data gets accessed frequently and derive the RAM amount from that. Again, the performance counters are the best way to find out whether your SQL Server needs more RAM.
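
The rule of thumb above, written as a Python function. The 60% “hot” share is the default from the text; the parameter names are illustrative, and a measured hot fraction (together with the performance counters) should replace the default when you have one:

```python
def ram_estimate_gb(mdf_gb: float, hot_fraction: float = 0.6, base_gb: float = 2.0) -> float:
    """Rule-of-thumb RAM: a fixed base plus the part of the MDF that is accessed frequently.

    The defaults encode the "2 GB + 60% of the MDF" rule; pass your own
    hot_fraction if you know how much of your data is really hot.
    """
    if not 0 <= hot_fraction <= 1:
        raise ValueError("hot_fraction must be between 0 and 1")
    return base_gb + hot_fraction * mdf_gb


print(ram_estimate_gb(50))                    # 32.0, the example from the text
print(ram_estimate_gb(50, hot_fraction=0.4))  # 22.0
```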

Do: Get dual-path Fibre Channel connections to the SAN from your servers.

Why: Dual-path Fibre Channel gives very fast and reliable connectivity to the SAN. Dual path means there are two paths: if one fails, I/O requests go through the other. We tested this by asking our support engineer to manually unplug a channel while the web app was running, and the application did not show any visible problem. Since the SAN is a network device that sits away from your servers, it’s absolutely important that you have 100% reliable connectivity to it and a backup plan in case of connectivity failure.

Reporting and Backup

Do: Have a separate server for moving IIS logs off the web servers.

Why: You need to parse IIS logs to produce traffic reports, unless you are using Google Analytics or another analytics solution that does not depend on IIS logs. Never run a reporting tool to analyze IIS logs on the web server, because it consumes CPU and disk I/O. It’s best to have a batch job that copies the daily IIS log to a separate reporting server, where the analysis is done.
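
A minimal Python sketch of such a batch job, meant to run on a schedule shortly after midnight. It assumes the IIS default naming for W3C logs with daily rollover (exYYMMDD.log), copies yesterday’s file because IIS still has today’s open, and prefixes the server name so logs from several web servers do not collide. All paths are hypothetical:

```python
import datetime as dt
import shutil
from pathlib import Path


def iis_log_name(day: dt.date) -> str:
    """Daily W3C log file name, e.g. ex080307.log for 7 March 2008 (assumed naming)."""
    return f"ex{day:%y%m%d}.log"


def copy_daily_log(log_dir: Path, dest_dir: Path, server: str, day: dt.date) -> Path:
    """Copy one closed daily log to the reporting server, tagged with the source server name."""
    source = log_dir / iis_log_name(day)
    dest_dir.mkdir(parents=True, exist_ok=True)
    destination = dest_dir / f"{server}_{source.name}"
    shutil.copy2(source, destination)  # copy2 also preserves the file's timestamps
    return destination
```

On web1, for example, the scheduled job would call `copy_daily_log(Path(r"D:\LogFiles\W3SVC1"), Path(r"\\reporting\iislogs"), "web1", dt.date.today() - dt.timedelta(days=1))`.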

Do: Have large detachable external storage, like external USB drives.

Why: During development, it is often necessary to bring large amounts of data, like a database backup, from the production environment to your local office. Sometimes you need to move large amounts of data from a staging environment to production. Transferring large amounts of data over the network is time-consuming and unreliable. It’s best to just ship an external USB drive containing the data: take a production database backup, store it on the USB drive with strong encryption, and ship the drive to your office.

Do: Use fast SCSI RAID 1 disks on the reporting/backup server.

Why: Reporting and backup require heavy disk I/O. When you are moving data off a web server or database server, you want the job done as fast as possible; you don’t want it delayed by a poor disk on your reporting/backup server. Besides, analyzing large amounts of data takes time, especially IIS logs, as does restoring a database backup for analysis, so the disks on the reporting server need to be very fast as well. Moreover, if you have a catastrophic failure and your database is gone, you can use the reporting server as a temporary database server while your main database server is being rebuilt. Once, our admin deleted our production database from the main database server. Fortunately we had a daily backup on the reporting server, so we restored it there and made the reporting server the main database server for a while, until we had prepared the real database servers with the production database.

Separate NIC and Switch for bulk operations

Do: Give every server a separate NIC that connects, via a separate switch, to a separate private network for bulk operations.

Why: When you do a large file copy, say copying IIS logs from a web server to the reporting server, the copy takes up significant network bandwidth. It stresses not only the private NIC that handles the very high-frequency communication with the database servers, but also the private switch, which is already busy with all the traffic produced by the web servers and database servers combined. This is why you need to do large file copies, like IIS log copies or database backup copies, over a separate NIC and a separate switch connected to a completely separate private LAN. Map network drives on each server using addresses on the separate network, so that any data transferred to those drives goes through the dedicated bulk-operation NIC and switch. You should also connect this bulk-operation network to the VPN, so that when you download or upload large files from your office, it does not affect the high-priority traffic between the web servers and database servers.

Conclusion

The above architecture is quite expensive, but it guarantees 99.99% availability. Of course, availability also depends on the performance of your engineers. If they blow up the website or delete a database by mistake, no hardware can save you. But having enough redundant hardware can ensure a fast recovery, so you get back to business before your VCs notice you were down. The architecture explained here can cost you about $30K per month in a managed hosting environment, so it’s not cheap. You can of course buy all this hardware on credit, and the monthly payment should be around $20K per month for about two years. So it’s up to you to decide how much you want to compromise between hardware and availability. Depending on what kind of application you are running, you can go for a smaller infrastructure and still guarantee 99.99% availability. Once, when we were very tight on funding, we ran the whole site on just 4 servers and still came out with 99.99% availability for three months. So, in the end, it is experience that gives you the optimal production environment for your specific application needs.
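
To put four-nine availability in concrete terms, the downtime budget is simple arithmetic. A Python sketch, assuming a 365-day year and a 91-day quarter:

```python
def downtime_budget_minutes(availability: float, days: float = 365) -> float:
    """Minutes of downtime allowed over `days` at the given availability (0.9999 = four nines)."""
    return (1 - availability) * days * 24 * 60


print(f"{downtime_budget_minutes(0.9999):.1f} min per year")             # 52.6
print(f"{downtime_budget_minutes(0.9999, days=91):.1f} min per quarter")  # 13.1
```

Four nines leaves under an hour of downtime per year, and only about 13 minutes over a three-month stretch like the one described above.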