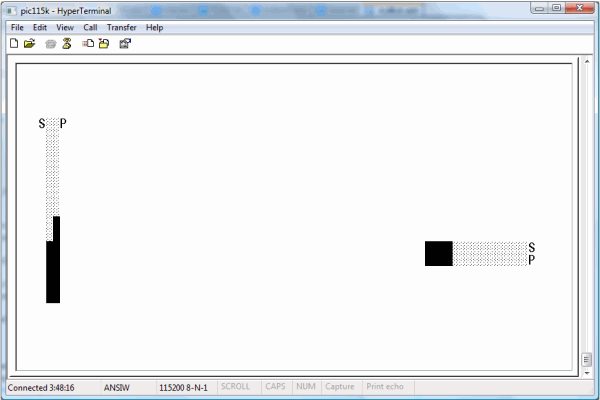

Figure 1: Image of the GUI for the default 2-dimensional application; the system is executing a "down" maneuver in the "Y" dimension,

while holding steady in the "X" dimension.

Introduction

The article at hand describes a set of techniques for the construction of networks of one or more Microchip Technology "PIC"

8-bit microcontrollers, in which each processor exercises cybernetic control over a single dimension (or degree

of freedom) using a PID (Proportional - Integral - Differential) control algorithm.

A complex control application, such as a robot, consists of several such PID control loops. These can run in parallel with each other, as is seen in the demonstration circuit

built for this article. In this circuit, an "X" dimension and a "Y" dimension are controlled, using similar means, but independently of each other.

It is also possible to connect control loops in series. On many boats and aircraft, for example, the task of controlling vehicle heading can be modeled by a PID loop commanding

rudder position. The heading control PID loop's output is a rudder position (e.g., in degrees), selected in an effort to achieve a user-designated heading setpoint. The positioning

of the vessel's rudder, though, often requires cybernetic control in its own right; the physical hardware may expose the ability to move the rudder to the right, or to the left,

for example, along with giving the ability to sense rudder position, without exposing any way to directly command a specific rudder position. It is in such situations that the concept

of connecting control loops in series becomes relevant.

A real cybernetic control application can thus rely on many control loops, and to be able to subdivide a complex control system in a one-processor-per-loop manner offers considerable

appeal. A one-loop-per-CPU design like the one presented in this article provides an easy answer to many design questions

that would otherwise accompany a concurrent multiprocessor system like the one described in this article. It is not easy to take a real problem and subdivide it definitively among

a handful of CPUs. Any architecture that offers this prospect deserves, the author hopes, a second glance.

The article at hand demonstrates that this simple, dimension-per-CPU approach to parallelism can indeed be made to work very well. A full hardware implementation is described,

and it is one that exercises obvious control over movement toward a setpoint in two dimensions, and does so in a way that is robust with respect to changes in the physical system.

Its scalability has been tested to 32 user-visible degrees of freedom, and basically unlimited internal PID loops. A man-machine interface based on an ANSI terminal GUI and an analog

joystick is provided.

The parallelism system employed here (one processor per control loop) is admirably simple; but one risk in anything so simple as the parallelism scheme just described is that

it will become overly simplistic. It is demonstrated below that, in many obvious ways, at least, this is not the case here.

A simplistic design can betray itself in several ways. Most obviously, it is possible that such a design will simply not function well, but the demonstration device built

in the article holds position well, and adapts admirably to environmental change. When powered on, for example, the demo application motor quickly and accurately moves its main

sliding assembly to the default position, and subsequent position moves commanded by the joystick are obeyed in similar fashion. This works well on some disparate hardware,

even before tuning constants (which are floating-point values) are adjusted. The associated man-machine interface is simple but intuitive and graphical, and is reliably rendered

at a high - and deterministic - frame rate of 6 frames-per-second.

From a specification standpoint, the analog inputs and outputs associated with the control system have a 10-bit resolution, the serial I/O performed by the processors occurs

at the RS-232 spec maximum of 115,200 baud, all PID calculations are done using floating-point numbers, and the system can be cheaply and quickly tuned in the field using

floating-point constants.

Beyond superficial performance, and beyond nominal specifications, though, an overly simplistic design can carry with it economic problems. Most design processes can simply buy

adequate performance using hardware overkill, but this is not the approach taken here. To say that doing all of the things described above using one PIC processor per control loop

seems like a very efficient result is a subjective assessment. This assessment, though, is one that more objective measures support. There is simply not much wasted space in this

design. A single PIC 16F690 cannot be expected to control more than one motor of the bi-directional sort used here, for example, because it cannot be configured to output two different

analog signals at once. For one such device to handle everything related to a single PID loop therefore does represent full employment of the device, from a standpoint of analog output count.

Furthermore, the 4 kiloword program memory of each PIC is over 90% filled by the code presented; several floating point operators are implemented in 8-bit machine language in support of the controller itself, and the largely 16-bit algorithms required are implemented in 8-bit PIC assembly language. This is an assembly language that lacks hardware multiply and divide instructions.

The existence of such an application using 8-bit PICs is, the author hopes, impressive; the prospect of being able to multiplex said application in scalable fashion is,

it is further hoped, even more impressive. It is also hoped that the economic implications of doing so using microcontrollers that retail at well under $2.00 per unit (along with some

cheaper discrete components) are especially impressive.

Finally, readers can rest assured that the design offered here rests on solid theoretical foundations already laid by the same author. As discussed in the next section,

the scalable architecture offered here derives from two key components: the very well-tested SFP real number type,

and the "Scrapnet" synchronous network. Each of these components receives an extensive and rigorous treatment

in its own article. Here, suffice it to say that both of these free components1 exemplifies the deterministic nature

of PIC code - even PIC code exhibiting substantial parallelism, and that this is a design direction which is expanded in this latest submission.

Background

To develop an application like this one from the integrated circuits up requires a great deal of underlying low-level work. The floating-point data type used here, "SFP",

and the operator functions required, are documented in this predecessor article. The multiprocessor serial networking scheme

employed, "Scrapnet", was similarly presented in another previous submission. The circuit presented here is a direct expansion of the circuit

shown in that article.

"Scrapnet" itself was an expansion of yet another article, where the real basics of wiring a PIC to a terminal are presented. In the interest

of brevity, the most basic of setup questions are better-addressed in these articles than they are here.

Using the Code

The GUI provided is shown in the first picture presented above. It is based around two bar graphs per dimension: one with the label "S" (for "Setpoint"),

which shows where the user commands the demo assembly to position itself, and another bar graph with the label "P" ("Position"), which shows the current actual position.

The joystick is used to make changes to the setpoint. If the joystick is held toward the right, for example, the horizontal "S" bar graph will move to the right in response,

and "P" will follow (if everything is working) as the motor control system causes the system position to move toward the setpoint.

The GUI code provided is designed for broad applicability. In the full two-PIC demonstration, the left CPU (if one views the circuit board with the processors' "pin 0"

at the front) renders a GUI based on vertical bar graphs, while the other CPU renders one that uses horizontal bar graphs. These are based on the 0 to 1023 range output by the 10-bit DAC,

with provisions for scaling the range of the bar graph to match the real range of position values attainable. Of course, in many practical applications this simple, general approach will

not be adequate. The 0 to 1023 range might need to be scaled to match user expectations. A 0-360 degree range would most likely be appropriate for a heading controller, for instance, perhaps

in conjunction with a circular, compass-like presentation. A simple rudder positioning system might have a GUI very similar to the one provided here, although even in this case some

concept of a center point would need to be introduced.

The "Scrapnet" protocol assigns a station number to each participating processor, based on the order (in the timer 1 period) in which the processors transmit.

In the demo application, the left processor is station 0 and the right processor is station 1. In general, each CPU in a complex control application implemented using the architecture

described here will run a PID loop, but only the CPUs that render a portion of the application GUI will have a "Scrapnet" station number. For example, in a serial control

loop application, the GUI might show graphics related to vessel heading, but not show graphics directly show rudder position. As detailed in the introduction, though, the rudder

positioning task would likely have a dedicated CPU - and control loop of its own - in such an application.

A reader interested in actually constructing the demonstration circuit should begin by following the (very detailed) instructions in this article

to get a basic PIC-to-terminal serial link working. This article also goes over issues of notation, especially as they relate to schematic diagrams and more details "rat's

nest" diagrams.

Subsequently, a reader engaged in the construction of the demo circuit described in this article must construct the demonstration board described

in the "Scrapnet" article. This article describes how to set up a grouping of PICs sharing a common clock and a common serial bus,

and gives a "rat's nest" diagram for a two-PIC demonstration board, along with source files for the necessary firmware for each processor. The photoresistor specified

in the "Scrapnet" demo should be omitted; in the control application described in this latest article, a second joystick axis is connected in its place.

The "rat's nest" format used in the "Scrapnet" article, and continued here, models a widely-available breadboard configuration (Radio Shack Part No. 276-002).

Other necessary supplies include jumper wires; Radio Shack part no. 276-173 is a suitable jumper wire kit. A 12 megahertz oscillator, an analog joystick, a PIC programmer, and some

diodes, resistors, and (for the full demo) capacitors are also necessary. These are all fairly commonplace items, but specific suggestions for their acquisition are given

in the "Scrapnet" article, where applicable, or later in this article.

The Radio Shack jumper wire kit closely matches the suggested breadboards. The kit contains wires of assorted length which can simply be pushed into the breadboard's holes

to make a connection. Connections of two sorts can be made. For a neat layout, the jumper wires can be laid flat against the breadboard. In dense areas of the circuit, these

connections are oriented mostly at right angles to its rows of holes. If this is done, and the wires are not crossed over each other, the resultant connections can be translated

into signal paths on a single-layer PCB (printed circuit board). Occasionally, though it will prove helpful

to make some long or problematic connection using a true jumper wire, i.e. one that protrudes up in the air in messy fashion. This is a design compromise, since these connections

will need to remain as jumper wires if the design is translated to a PCB. In the design described below, non-crossing PCB-style paths are used to a great extent, but are augmented

by a few loose jumpers, particularly in the interfaces between subsystems.

The next "rat's nest" diagram shown below represents the "Scrapnet" demo circuit, as built by the author on the suggested breadboard. The photoresistor used

in the final "Scrapnet" demo is omitted, as specified above, and is in fact replaced by a connection to a second joystick axis. This circuit design can be built

on a breadboard, but it can also be built using the suggested ICs and discrete components in conjunction with a single-layer printed circuit board. and just one jumper wire. (This is the

long red wire, which is part of the "Scrapnet" data line.)

Figure 2: "Rat's Nest" diagram for the "Scrapnet" multi-CPU joystick demo

Note that, if the suggested joystick (Radio Shack part no. 26-3012B) is used, then the "Y" axis signal wire will be green, the "X" axis wire will be brown,

the joystick-to-ground wire will be black, and the joystick connection to positive voltage will be red. A picture of the analog joystick used by the author is shown below:

Figure 3: The author's analog joystick

As in the "Scrapnet" article, the synchronous, multiprocessor bus relies on a "go" button to establish a starting point for timing purposes. Diodes are used

to shunt the digital signals used to manage the flow of data between processors, and resistors are used to create weak connections to ground for analog signals, in an effort

to establish a proper "zero" point. Readers unfamiliar with such topics, or curious about the rationale behind any aspect of this circuit, will find answers in the

two predecessor articles already amply cited here.

The image beneath this paragraph shows this same circuit in the standard format for electronic schematics. As was done in the "Scrapnet" article, the "Scrapnet"

bus is highlighted in red. For simplicity, the programming harness is not shown in this diagram. (It is shown in the "rat's nest" diagrams, since these are intended to guide

actual construction in detail.)

Figure 4: Electronic schematic for the "Scrapnet" multi-CPU joystick demo

To run the twin PID demo properly, it is necessary to program both PICs. This simple process used to make the PICKit2 program a CPU is detailed in this

predecessor article. Here, processor runs a variation of the same firmware. Either chip's firmware can be built from file "multibot.asm", using build script

"make.bat". The PIC actually being targeted by the build is determined by preprocessor constants. If MBOT_STAT1 is defined, then processor 1 (the processor for

the "X" dimension, in the demo application) is being targeted. A similar constant, MBOT_STAT0 is associated with CPU 0 (the processor for the "Y" dimension).

As an alternative to building the demonstration code, either of the necessary binary files can be obtained from the demo archive supplied at the top of the article. This archive

contains two files, each of which was built for a designated processor (0 or 1). These files are named to indicate the processor targeted by each.

The code at hand provides complete support (e.g. GUI support) is only two processors. However, the overall architecture used is designed to scale naturally to configurations with

more than 2 processors. If a third processor were desired, for example, constant MBOT_STAT2 could be created. The developer would then need to add implementation code bracketed within #ifdef MBOT_STAT2 / #endif pairs. Some sort of user interface code would have to be provided, and this is application-specific.

In cases where the implementations for MBOT_STAT2 through MBOT_STAT15 are repetitive in their construction (e.g. in the timing code necessary to stagger transmissions

according to "Scrapnet" station number), these are provided in the supplied code.

Mechanical Setup

The demo circuit described in the last two diagrams is sufficient to render the demo GUI, and to accept joystick input and indicate it in the form of setpoint changes in the GUI bar graphs.

Once the PICs have been wired up and programmed as described above, the absence of the circuitry and apparatus associated with the physical control aspect of the demo will

not prevent the GUI from rendering if power is applied (at a PIC-friendly voltage level) and the "go" button is pressed.

However, the scope of this article extends well beyond simply pushing bar graph indicators around. The next "rat's nest" diagram shown below expands the simple

joystick / networking demo circuits developed thus far into a real cybernetic control circuit, and in particular into a device that controls position in one or (if fully constructed)

two dimensions.

The actual implementation as built by the author is adequate for development purposes, e.g. for purposes of developing firmware. In its construction, a CD-ROM drive from a desktop

computer was deconstructed, and the sliding assembly used to position the drive head was removed and mounted on what would normally be its right edge, to provide a left-to-right dimension

to control.

In the diagrams of the motor amplifier circuit presented here, the connections to the motor are shown simply as two wires, without reference to voltage levels or direction.

In practice, the sort of DC motor for which this amplifier is suited can accept positive voltage at either terminal, with the other terminal connected to ground. Depending on which

terminal is powered and which is grounded, the motor will run in either of two opposite directions. These directions will be clockwise and counter-clockwise at the motor shaft.

With the full demo assembly in place, they will be left and right.

The power supplied to the motor can be varied to affect the torque output by the motor, which does allow for more precise control. Ultimately, the amplifier circuit described below

translates the analog output of the PIC into an analog motor command signal, although there is a threshold voltage below which the motor will not move at all.

The position sensing system used in the author's demo application consisted of a photoresistor and a lamp. The lamp was simply positioned at one end of the travel of the CD-ROM head

assembly, and the photoresistor (e.g., Perkin / Elmer part no. VT90N2) was glued to the head itself, aimed parallel to the travel of the unit. This provided a stable, and linear, position

sensing circuit, despite the obvious potential problems presented by ambient light. In practice, the application lamp ends up being by far the most influential actor on the position-sensing photoresistor. The author used a 12-volt DC automotive bulb (Federal Mogul part no. BP3157) as a lamp, but many 12-volt bulbs will work.

A photograph of this sliding motor assembly, with its interface leads exposed, is shown below. Each of these leads is labeled in this picture, as is the moving assembly whose position

is controlled by the PID loop, and the photoresistor that is connected to the position sensing circuit. The specifics of each lead's connection to the rest of the circuitry is explored

in the remainder of this article. Finally, in considering the picture below, remember that the apparatus shown has only one degree of freedom. A full two-dimensional demo would need two

such devices (or, at least, additional hardware of some sort).

Figure 5: The test motor / position sensor assembly; another Radio Shack breadboard is used as a base.

New Circuitry

Unlike the 'go' and joystick signals, the position signal does not use a pull-up resistor, but rather a pull-down connection to ground. The position signal, at least in the optical

system used here, tends to have a higher minimum value than the purely electrical systems used for the joystick and 'go' signals. If nothing else, this will be the case due to noise

from ambient light. If this were eliminated via some sort of shielding mechanism, a pull-up might become advisable.

Beyond the motor, moving assembly, lamp, and photoresistor, an amplification circuit is necessary in order to drive an electric motor. The PIC cannot perform such a role on its own.

In addition, the demands of the DC motor will outstrip the ability of the PICKit 2 programmer (or similar device) to supply power. The next figure shown below presents the power and

amplification circuitry necessary for control of a DC motor, with the motor drive circuitry dedicated to the dimension control command pins of CPU 1:

Figure 6: Complete application for control of a single dimension

The additions to the demo board shown in the diagram above are largely confined to a new motor control board built on a smaller Radio Shack breadboard (Part #276-003) and positioned

to the right of the original board. This new board also takes care of providing power, and is constructed around a few discrete components.

First, two "7805" voltage regulators are used to split a single +12 volt DC input into two independently regulated 5-volt buses. In addition, a matrix of transistors is used

to amplify the low-power command signals emitted by the PIC into a higher-power signal viable for the control of a small DC motor. The motor power signal, in fact, is the sole current

sink for one of the 7805s. The other 7805 is devoted to supplying the two CPUs and all of the associated components on the original demo board (which were, in prior submissions, powered

from the PIC programmer). The use of independent voltage regulators serves to reduce the impact of motor-related noise on the power supply being fed into the CPUs. While this design is

effective enough, it ought to be noted that it does not provide true circuit isolation in the fullest sense, in that the CPUs and the motor ultimately do share a common ground.

The lamp is fully isolated if wired as shown in the diagrams above. This final level of separation keeps motor moves from causing the light to dim, which would represent

an undesirable form of positive feedback (which amounts to movement away from the setpoint, or at least a tendency

toward such movement).

The last diagram shown documents the circuitry necessary to power the CPUs and run the "X" motor. In a full 2D application, it would be necessary to wire something similar

to CPU 0 as well, for the "Y" dimension. The necessary circuitry parallels what is shown in the last diagram. However, it is possible to omit the voltage regulator that powers

the CPUs from the "Y" circuit. The 7805 shown on the "X" circuit power board is sufficient to power the CPU portion of the board, including both CPUs and

the associated TTL and analog hardware.

The diagrams above do not differentiate between the two motor terminals in any way, and this introduces the possibility of reversing the two wires that connect the amplifier

and the motor. In the circuit described here, one way to test for reversed terminal connections at the drive motor is to connect the jumper wire that normally connects to pin 5

of the PIC directly to positive voltage instead. This should result in movement toward the right, or more specifically in movement in the direction toward which the position signal

tends to increase. More generally, pin 5 is the positive direction command signal, and pin 6 the negative direction command signal, for the firmware provided. At least, this is the case

if one uses positive values for the PID tuning constants (KD, KI, and KP).

Finally, it should be mentioned that an analog filter on the position input signal will often enhance the overall performance of the system. There is no software filtering in the

firmware as provided. In the demo circuit built by the author, a 180 picofarad capacitor, of the Mylar disc type, was included for filtering purposes. One end of this capacitor was

connected to the position input before it arrives at the CPU, and the other end was connected to ground. This serves to smooth out spikes and other excursions in the position input signal.

The capacitor is not shown in the diagrams presented here, in the interests of simplicity and of generality.

Physical Systems

As demonstrated in the last section, the extension of the basic "Scrapnet" demo board already described into a cybernetic control system relies on connections

to pins 5 (analog signal out, back/right), 6 (analog signal out, forward/left), and 15 (position). The reader wishing to apply the controller described here to some physical system

other than the author's sliding drive tray motor and photoresistor will need to focus on these pins - on each CPU - in customizing his or her application. Some applications will

wish to accept input from some sort of device other than a joystick, and in such cases design decisions relating to pin 12 will also be necessary.

In some cases, the production application will ship with hardware features (such as a plugs, sockets, terminals, or wires) allowing the end user, or an installation technician,

to connect whatever might be necessary. Whether the author's setup (5-volt TTL "left" and "right" command signals, plus 5-volt TTL joystick and position inputs)

is adequate or not will depend on the exact product. One commercial, off-the-shelf marine autopilot with which the author is familiar outputs a single analog signal ranging

from -10 volts DC to +10 volts DC, per rudder, or a similar signal ranging from 4 to 20 milliamps of DC current. The developer attempting to extend the work presented

here into such a millieu will therefore have some analog circuit design to do. The basic foundation provided here, though (e.g., the PID code, the SFP and HLOE libraries,

the "Scrapnet" protocol and network circuit), will remain valid.

Amplifier

In the demo / development application provided, each CPU has two outputs, which are assumed to effect opposite actions (e.g. up and down, left and right, or clockwise

and counter-clockwise2). This is not strictly necessary for all PID applications, though. It is certainly possible to command actuator position using a single analog signal,

although overall signal resolution is correspondingly reduced.

The two analog outputs emanating from each dimension's PIC, in this example application, are directed to two "TIP31" transistors. These are high-gain components,

which serve to convert the DC signal coming from the CPU to a corresponding signal of higher current. Each TIP31 takes its power supply from the dedicated 5-volt bus used exclusively

for motor supply.

In the amplifier circuit shown above, there is a "left" TIP31 and a "right" TIP31. When the PIC applies its relatively weak current to the collector pin

of either TIP31, motor current is applied from the emitter of that TIP31 to one or the other terminal pins of the motor, resulting in movement in one of two possible directions.

When current is supplied to one terminal of the motor, the other terminal must be connected to ground in order for any motor movement to actually occur. This is handled using

two more transistors, which are activated using a small portion of the motor drive signal emitted from each TIP31's emitter.

These transistors used to ground out the motor are smaller "2222" transistors, which require less current to operate than the TIP31. This allows the majority

of the motor drive signal emitted from the TIP31 to actually get applied to the motor drive, instead of getting wasted on making the ground connection. A smaller transistor

is workable as a pathway to ground, since the large power loss inherent to the motor implies that less current will be making its way back to ground than was originally conducted

through the (larger) TIP31.

The schematic below shows the transistor network used to generate a single motor's drive signal:

Figure 7: Motor drive amplifier schematic

Firmware Design

The Higher-Level Operating Environment

The PIC code provided relies on a modular runtime library already largely exposed in the SFP article

and the "Scrapnet" article. In the file names and identifiers used here and in those articles, this library is referred

to as HLOE (High-Level Operating Environment).

One difference between this article and its predecessors is that the application code provided here consists of just one compilation unit. The file "multibot.asm"

is a free-standing, single-file entity, whereas the "Scrapnet" and SFP demos made use of multiple .ASM files. The switch to a single .ASM file was made in this

article because, otherwise, the build time for the application would be lengthy, due to the fixed costs associated with spawning a new MPASM process for each file and other

fixed costs associated with each assembly language file. The real effects of this file composition change are, fortunately, minimal. In all three code bases,

each function resides in a dedicated code page, and the semantics associated with calling these functions are the same. Much of the HLOE library code is identical across

all three articles, other than its consolidation into a single file here.

As before, HLOE consists not just of a library, but also of a calling convention and two stack implementations. These stacks reside in static RAM, and are distinct from

the hardware stack used to hold return addresses. HLOE has been designed for concurrency, and in particular to support applications where interrupts are enabled 100% of the

time (after some constant, initial setup time). This aspect of HLOE is necessary, for example, to achieve the exact timing necessary for participation in the "Scrapnet" bus.

The two HLOE stacks are operated upon using macros (PUSH and POP for stack 0 and KPUSH and KPOP for stack 1). Stack 0 serves

are the parameter stack for HLOE library functions, as well as for what amount to "user" functions in "multibot.asm". Stack 0 also holds

automatic (or "local") variables3 during function execution. In the code provided here,

stack 1 is used chiefly to hold base pointers into stack 0 during each parameterized function call. Because of the length of the stack 1 routines, they also can be called

as functions (vs. emitted as a whole macro). The function names are kpop and kpush. Finally, note that any unqualified references to "the stack"

in the discussion below refer to stack 0.

The central nature of the stack in this application is evident in some macro declarations near the top of "multibot.asm". These are essentially constant definitions.

Among them are the PID tuning constants KP, KI, and KD. However, in the stack-based architecture used here, these

constant declarations consist of snippets of code that emit a constant 16-bit SFP floating point value onto the stack. These macros are inserted into the assembly language in the

remainder of "multibot.asm" as necessary, in order to put a particular constant atop the main stack, for calculation purposes.

All of the user-tunable constants exposed here take this form, and this reflects the fact that all of the analog sensing and signal generation done here uses the 16-bit SFP real

number type for storage and processing. A few of these declarations are shown below:

K_SUB_P macro

movlw .68

PUSH

movlw .5

PUSH

endm

K_SUB_I macro

movlw .32

PUSH

movlw .252

PUSH

endm

K_SUB_D macro

movlw .122

PUSH

movlw .11

PUSH

endm

The formulas given in the comments attempt to translate the two byte-push operations directly evident in these snippet declarations into floating point numbers in more traditional,

decimal form. Note that each byte pushed is expressed as a decimal value from .0 to .255. While somewhat unfamiliar, the adjustment of these constants in the field

or laboratory is actually not that difficult, if one simply treats the first number pushed as a fine tuner, and the second as a coarse tuner. This allows for 256 "coarse"

settings and 256 "fine" settings within each of these. Only 128 of the "fine" settings are actually useful; KP, KI,

and KD should be positive numbers of any magnitude. These will consist of a 0 to 127 first byte pushed (mantissa) and a 0 to 255 second byte pushed (exponent).

More information about the SFP type and notation can be obtained from the article Minimalist Floating-Point Type,

and the section of this article dedicated to system tuning also discusses these topics.

Note that single byte constants exist as well. Their construction is similar to the SFP constants, but with only one PUSH operation. An example is shown below:

JOY_CHANNEL_IN macro

movlw .0

PUSH

endm

The prominent role of macros in the architecture described in each of these articles works well for the processors used. The limited depth of the hardware call stack

implies that the use of function calls must be well-controlled, or return instructions will simply stop working. Macros provide an alternative to function calls,

for abstracting over repetition in the code. The tradeoff is that macros end up using more code storage, but the 16F690 actually has a fairly ample code storage area.

At 4,096 14-bit words (compared to its 256 bytes of static RAM), its code storage is one of the 16F690's strengths. The macro-based design described in this article exploits this strength.

The content of "multibot.asm" can be divided into two general regions - "user" and "kernel". The "user" portion of the code

consists of the event handler, a main task, and user-defined functions. This is the portion of the code in which the PID calculations are implemented. These parts of the code

call into the kernel extensively, but they are very different from the "kernel" code in their composition and style. An example is the use of higher-level structures

like dynamic allocation that is apparent throughout "user" code.

The kernel portion consists of the bookkeeping code required for parameterized function calls and for context switching, plus a defined set of functions comprising the HLOE kernel;

these are listed below:

Table 1: HLOE Kernel Functions Used in This Application

printu: Prints an unsigned byte, in decimal format (ASCII / serial)graphx: Draws a horizontal bar graph (ANSI terminal)graphy: Draws a vertical bar graph (ANSI terminal)mulf: Performs SFP multiplicationdivf: Performs SFP divisionaddf: Performs SFP additionandu: Performs an unsigned byte logical ANDgtf: Compares SFP floats, returns boolean byteandb: Performs a boolean AND operation on two bytes (non-zero is true)add: Adds bytes (signed or unsigned)printch: Prints an ASCII charactercopyf: Copies the SFP real number value atop stack 0parm: Accesses function parametersutof: Converts an unsigned byte to its SFP equivalentftou: Attempts to convert an SFP value to its unsigned byte equivalenteq: Tests bytes for equalitysetbit: Sets a single bit of a byte (and returns the result)clearbit: Clears a single bit of a byte (and returns the result)iszerof: Returns non-zero if and only if the SFP parameter is 0.0dispose: Discards the value atop the main stack

Note that, although there is no SFP subtraction function, mulf and addf can be combined to perform subtraction. The second or right-hand operand

of the subtraction must be negated by multiplying it by -1.0, and then addition must be performed.

These are not all of the HLOE functions, in the broadest sense; the "Scrapnet" demo code base, for example, contained functions not present in this latest offering,

such as the night function, which applies a low-light palette. The SFP code base, of course, contains other floating-point

operations (e.g., powf and logf).

The macros defined in "hloe.inc" (and in "kernel.inc", which it includes) are also part of the kernel. In addition to the macros associated with the two stacks,

this file contains the FAR_CALL macro, which is used to call functions while properly managing the high bits of the program counter. (The technique used

is an old one - see this source.) Finally, the PREEMPT and RESUME macros are provided

to facilitate context switching. These macros save, and restore (respectively) all of the pointers and other registers associated with the execution context, so that the execution

of the ISR can occur without disrupting the main task.

The division between "user" code and "kernel" code is useful as an architectural distinction, since each of these two portions of the overall code base

has a distinct design. The way in which "user" code is designed is intended for the object code of a higher-level language, or at least for higher-level techniques,

whereas the way in which "kernel" code operates is designed for optimized assembly language code. From an extensibility standpoint, it is possible to construct a wide

variety of other application programs by writing new, higher-level "user" code around the same "kernel" code.

HLOE Notation

Something similar to the Hungarian notation seen in low-level Windows programming is present

in the identifiers listed in Table 1. Here, though, a system of suffixes is used, instead of the prefixes present in Hungarian notation. This was viewed as less invasive.

Making the type-determined part of the identifier a suffix downplays it compared to the specific, programmer-selected part of the identifier, and this is appropriate,

in the author's view, for this particular application at least. These suffixes are limited to one letter.

The naming conventions described here apply for three major categories of names. These three categories were estimated to be the most relevant

to the developer writing HLOE "user" code. First, the HLOE "kernel" functions are named in this way, which allows these names to convey a great deal

of information concisely and unambiguously. This role is evident in Table 1 above, most basically in the distinction between functions like add (for byte data)

and addf (for floating point data).

Incidentally, this role in "kernel" function naming is not shared with true Hungarian notation. Few Windows API developers, inside or outside of Microsoft,

have ever used Hungarian notation to name their functions. Formal parameters for the Windows API are sometimes named using Hungarian notation prefixes, at least in the documentation.

Also, certain lower level aspects of the Windows / Intel architecture, in particular the mnemonics of Intel assembly language, do follow similar patterns, with varying degrees of consistency.

In addition to "kernel" functions, the formal parameters to HLOE "user" functions are named using the designated suffixes, as are the automatic

variables they allocate. In all cases, these suffixes are appended to the end of the identifier without any separator, and use the same case as the rest of the identifier.

The suffixes used for these HLOE naming conventions are given in the table below:

Table 2: HLOE Notation Suffixes

- F: This suffix applies in the many cases where 16-bit SFP data is primarily involved,

e.g., functions

divf, addf, and mulf. - U: This suffix is used when single-byte unsigned data is involved. HLOE "kernel" function

divu is an example. It works properly

for 8-bit unsigned integers ranging from 0-255, but does not divide 8-bit signed integers properly. Several single-byte "user" function parameters in "multibot.asm"

are also named in this way. Channel number parameters are one example. - I: This suffix is used when single-byte signed data is utilized. HLOE "kernel" function

negti is an example.

It negates a signed 8-bit integer. - B: This suffix applies whenever a boolean value is used. These are single-byte values, where 0 implies false and all other values are true.

One example of this suffix is "kernel" function

andb. This function is distinguished from andu (which performs a bitwise AND operation) only by its suffix. - No Suffix: No suffix is used in situations where the identifier involves single-byte data, and there is no need to make any further distinction about type.

HLOE "kernel" function

add, for instance, performs single-byte addition, and this works for both signed and unsigned values. It is therefore simply

add, as opposed to addu or addi, since those names would understate the capabilities of this function. The eq function

is named following the same rule; it performs a bit-level comparison of two bytes for equality, and therefore works for any single - byte type.

Conversion functions follow a naming convention built around these suffixes as well. Examples are utof, which converts an unsigned byte to its SFP equivalent,

and ftou which reverses this operation.

Call Mechanism

The basic call mechanism already present in the SFP and "Scrapnet" articles is augmented, in this latest article, by a new system that allows for "user"

functions to access their parameters using calls to kernel function parm. Calling

parm with a (byte) value of 4 atop stack 0, for example,

will result in the 4 being consumed by parm, and replaced by parameter number 4. The parameters to each function instance are indexed from the top of stack 0 down, in byte order.

This approach to parameterization is more organized than the variety of approaches seen in the "kernel" functions. These use the same calling convention as the "user"

functions, i.e. they accept parameters atop the main stack and replace them with return values, if any; but they do not, as a rule, use

parm to access these parameters.

Rather, they employ a variety of ad hoc approaches typical of low-level assembly code. The dichotomy between "user" code and "kernel" code is explored

in great depth below; here, suffice it to say that the kernel functions are written in relatively low-level PIC assembly language, with all of its attendant quirks,

whereas "user" code is very stack-oriented, even "functional" (see [1]) in nature.

Proper operation of the parm function depends on the presence of a base pointer atop stack 1 (the second or auxiliary stack) during the execution of each function call instance.

This pointer is a copy of the main stack top pointer as it stood when the function was originally called at runtime (i.e. right after its parameters were pushed, but before

the function body began to execute). The parm function assumes that this base pointer is atop stack 1. Its presence is endured by several code snippets like the

one shown below, which is in fact a sort of prologue pre-pended to each "user" function in "multibot.asm" that accepts parameters:

movf FSR,w

FAR_CALL conform_i , kpush

The identifier conform_i, in the example above, is the address of the caller function; the FAR_CALL macro uses this identifier to properly manage

the program counter paging registers associated with the function call. This is necessary because each of the functions in "multibot.asm" resides in its own code segment,

for maximum flexibility (and SRAM allocation efficiency) during the MPASM build process. FAR_CALL begins by selecting the correct page for the function being called.

Then, it calls the designated function. After that function returns, the code page of the caller function is restored. Note that this is not done using some sort of temporary storage

location or stack slot (which would introduce potential concurrency issues), but instead relies on the invocation of FAR_CALL in the source code to name the correct

caller function (or, at least, a label within its code page). This was judged a small price to pay in exchange for the resultant benefits, in particular for the way in which

FAR_CALL allows all goto instructions in the caller code and the function code to work correctly, without further thought by the developer.

All of the calls in "multibot.asm" thus use the FAR_CALL macro. Were this no the case, problems would quickly emerge. The object binary uses over 90%

of the PIC16F690's code storage area, and as a result the functions being called are located throughout the multiple pages of storage allocated for code.

Also, it should be noted that FAR_CALL assumes that each function (caller and function) resides within a single 2,048-instruction code page (see [2]).

Otherwise, the guarantee made above with regard to goto may not hold. The author's observation is that the MPASM build tools will generally ensure this to be the case,

unless it is not possible, e.g. if a function is written by the developer that is greater than one code page in size.

Instructions that address the program counter register directly work in terms of 256-instruction pages, since these instructions carry only an 8-bit operand. This is mostly an issue

during the implementation of SRAM-resident lookup tables based on the retlw instruction. These provide a way to store constant data in what is normally code (vs. data) storage.

They can certainly be implemented in the HLOE environment; the powf and logf SFP operations are examples,

and considerable guidance is also available from Microchip Technology (see [3]).

A High-Level Design Dilemma

At this point in the discussion, it should be evident that many higher-level structures are in play here which are not typical of 8-bit PIC assembly language. In the next section,

even more such structures are described. Mechanisms for dynamic allocation, and even for automatic garbage collection are discussed. At the same time, some cumbersome aspects

of the development process are still evident, and seem to demand further abstraction. The need to pass the calling function's name as a parameter into FAR_CALL is an example.

The developer will inevitably set this parameter incorrectly in a few cases (e.g. due to the use code copied from elsewhere), and a whole new category of program bug is therefore

introduced by these higher-level structures. Another difficulty is introduced by SFP: the obscure way in which SFP values are portrayed in the actual code.

These little pitfalls have not been cured by some further abstraction in the code provided, mostly because of the author's decision to present an article written in PIC assembly

language, and not in a higher level language. The systems necessary to remove this problem with FAR_CALL, and many other "accidental"

difficulties (in the terminology of [4]), exist already in the author's own laboratory. Their presentation here, though, would interfere with the stated goal of providing

the reader with a code base written in standard PIC assembly language, and this goal was considered inviolable, at least for the article at hand. Assembly language remains very

much the lingua franca for code running on PIC 16 devices, and this reflects the difficulties inherent to using an existing higher-level language (many of which, like C,

were designed for general-purpose computers) on such a device.

Implementations of existing high-level languages for the PIC 16F690 are, unsurprisingly, somewhat sparse. Microchip Technology itself packages a C compiler with the MPLAB IDE,

but it did not support any PIC 16-series devices

when this was written. Microchip directs developers to the Hi-Tech C compiler, which offers only 24-bit and 32-bit

IEEE floating point data types; these would likely prove too large for the PID application implemented here, which barely fits onto the 16F690 even after the savings associated with

using a smaller (and non-IEEE-compliant) data type. The SourceBoost C/C++ compiler targets the PIC 16F690,

but does not offer any floating point data type at all, despite its makers claims to be competing

with the Hi-Tech compiler. This may reflect SourceBoost's negative assessment of the practicality of floating point data on these devices.

Ultimately, none of these tools offered an easy alternative to the assembler-based code presented in this article. The author was therefore left with a decision between 1)

presenting something in a made-up language of his own (the novelty of which would no doubt distract from his efforts to present the PID implementation), and 2) tolerating

the little difficulties inherent to writing his application in PIC assembly language. The latter option (assembly language, with all its attendant drawbacks) was, of course, the one selected.

To a large extent, these drawbacks are ameliorated by macros and libraries. Even these efforts, though, stop short of what could ultimately be done in PIC assembly language.

This is because the author did indeed eventually abandon his assembly language efforts in favor of his efforts to build a new high-level language and compiler built more closely

around the capabilities of devices like the 16F690. The result was thought to be worthy of its own presentation, as was the application described in this article. If this article

is well-received, it is likely that these further abstractions will become material for another article.

Automatic Garbage Collection

Above, it was suggested that the "user" code present here operates at a higher level of abstraction than other PIC assembly language code, as exemplified

by the "kernel" code. One example of this phenomenon is the way in which "user" functions manage stack 0, and in fact allocate automatic variables

atop stack 0. This is in direct contrast with the static techniques utilized by the kernel.

Though no C++ or Java-style method declaration is evident in the assembly language code, each HLOE kernel or "user" function does have a signature. Function parm,

for example, was described above as accepting a single byte and returning a single byte in exchange; this description is its signature. SFP binary real number operators like addf

and mulf accept four bytes (two dual byte floating point values) and return a single two-byte SPF floating point result. Again, this is a signature.

Each of the HLOE "user" functions present in "multibot.asm" ends with an epilogue section that essentially enforces its signature, while allowing the function body

above it to freely build values atop the stack as necessary.

For example, the function conform_i checks its two-byte SFP real number parameter for conformance to a defined range, and replaces it with a maximum value if it does

not conform to the range. As such, this function accepts a single SFP real number (two bytes) and replaces it with another SFP real number. During its execution,

conform_i

freely allocates values atop the stack, by pushing literal values, calling functions that remove and replace stack values, and so on.

Since this function returns the same number of bytes as it accepts as parameters, the stack points upon return to the caller should be equal to the original base pointer,

i.e. to the top of stack 0 when conform_i was called. When its operations are complete, the body of conform_i does not necessarily leave the stack pointer

in the correct spot for its signature. However, what it does without question is that it leaves the two bytes it wishes to return to its caller atop stack 0. The epilogue section

mentioned above performs the manipulations necessary to ensure that these two bytes are indeed returned to the caller, and that this is done with the stack pointer in the

"correct" spot for its HLOE signature as defined above. Other than placing its proper return values, the body of a HLOE "user" function has leeway to operate

freely upon the stack, provided that it contains the epilogue and prologue sections described here. Ultimately, this represents a form of automatic garbage

collection: automatic variables.

An epilogue exists for each HLOE "user" function. These are mechanical in their construction, and the repetitive code that results is a candidate for further abstraction.

As was the case with the problems inherent to FAR_CALL, though, the necessary abstractions are left for discussion in a possible future article. The control application presented

here is already broad in scope, and to present the full gamut of high level structures entertained by the author in the experimentation that led up to this article would require not so much

an article as an entire book.

However, a few additional high level structures are discussed in the next section. In particular, these relate to the parallelism that exists between the main task and the timer event.

This parallelism is a crucial aspect of the firmware provided here. It enables the PID algorithm to use the full capabilities of the CPU, subject only to the comparatively infrequent

demands of the "Scrapnet" network and the GUI rendered thereon, and for all of this to take place with interrupts enabled 100% of the time.

Finally, a modified version of the kernel function listing given above is inserted below this paragraph. This latest version expands the previous listing into a table that includes each

function's signature:

Table 3: HLOE Kernel Functions Used by the PID Code (With Signatures)

| Name | Description | | Input Bytes | | Output Bytes |

printu | Prints an unsigned byte, in decimal format (ASCII / serial) | | 1 | | 0 |

graphx | Draws a horizontal bar graph (ANSI terminal) | | 4 | | 0 |

graphy | Draws a vertical bar graph (ANSI terminal) | | 4 | | 0 |

mulf | Performs SFP multiplication | | 4 | | 2 |

divf | Performs SFP division | | 4 | | 2 |

addf | Performs SFP addition | | 4 | | 2 |

andu | Performs a bitwise AND operation | | 2 | | 1 |

gtf | Compares SFP floats, returns boolean byte | | 4 | | 1 |

andb | Performs a boolean AND operation on two bytes (non-zero is true) | | 2 | | 1 |

add | Adds bytes (signed or unsigned) | | 2 | | 1 |

printch | Prints an ASCII character | | 1 | | 0 |

copyf | Copies the SFP real number value atop stack 0 | | 2 | | 4 |

parm | Accesses function parameters | | 1 | | 1 |

utof | Converts an unsigned byte to its SFP equivalent | | 1 | | 2 |

ftou | Attempts to convert an SFP value to its unsigned byte equivalent | | 2 | | 1 |

eq | Tests bytes for equality | | 2 | | 1 |

setbit | Sets a single bit of a byte (and returns the result) | | 2 | | 1 |

clearbit | Clears a single bit of a byte (and returns the result) | | 2 | | 1 |

iszerof | Returns non-zero if and only if the SFP parameter is 0.0 | | 2 | | 1 |

dispose | Discards the value atop the main stack | | 1 | | 0 |

Functional Programming (FP)

The guidelines under which HLOE "user" code is built reflect a "Functional Programming" (FP) approach (see [1]), which simplifies many aspects of the design. The advantages inherent to FP stem from the high degree of modularity evident in functional code, at the function level.

This modularity pays dividends in many areas. In the application provided, FP was selected because it facilitates the easy management of dynamic storage and of concurrency-related issues. Ultimately, though, FP provides a powerful way of thinking about the entire software development process.

Functions are easier to test than, for example, object methods. The lack of any notion of object state greatly reduces the number of cases that must be dealt with. Pure functions can be defined, implemented, and tested in terms of their inputs and outputs, without reference to external factors like object state.

Real-world applications such as this one almost always use impure elements. The benefits of beginning from a premise of function construction, though, instead of by identifying elements of state to build a class around, are real. Places where the application does deviate from the pure FP ideal serve as the obvious, and well-isolated, potential points-of-failure. These are the parts of the application where special care must be taken to ensure corrections. Everywhere else, certain things can be assumed.

FP in its purest form (as defined in [5]) implies that code consists entirely of calls to pure functions. There is no concept of a direct assignment into a memory variable, for example.

Lambda calculus (see [1]), a sort of "'machine code' of functional programming" (Ibid.), extends this concept even further, and builds a powerful computing

infrastructure around higher-order functions (functions that return functions), while hewing tightly to the pure FP ideal.

The "user" code present in "multibot.asm" does not make use of anything resembling higher-order functions, nor does it qualify as "pure" FP under any reasonable definition. It nevertheless enjoys some important advantages

that are very characteristic of FP.

Code that consists purely of function calls does not exhibit the side effects inherent to static

allocation (vs. parameters and automatic variables, as are used here). In other words, such code exhibits

referential transparency: any reference evident in the code refers unambiguously ("transparently")

to a single actual parameter of a standalone function call instance.

Functional code is thus inherently reentrant, again with the caveat that things like I/O have their own built-in management issues,

especially in a concurrent environment. FP does not absolve the developer of HLOE "user" code from the need to manage resources, but it does absolve the developer of the need

to worry about race conditions involving static variables, among other trivialities.

HLOE "user" code follows the FP ideal closely, in that it allocates most of its storage dynamically, on the main stack, in the form of function parameters and automatic

variables. In this way concurrency issues associated with the use of static memory locations are avoided. This is a fundamental way in which the functional programming approach facilitates

parallelism.

For example, if a "user" function were to use a static memory location as a sort of temporary holding location, as is common in many algorithms outside the functional

programming paradigm, then it might be possible for event handler code to intervene and corrupt the contents of the static location. The top level "user" code in the program

provided (the main task, the event handlers, and the non-kernel functions) does not use static storage in this way, with just one key exception discussed shortly below, and it is thus

immune to such concerns.

In a true functional program, in the strictest sense, no variables per se are declared, only function parameters. Such a program relies entirely on the stack for storage

allocation and is thus reentrant. There are no static storage locations to cause side effects at all.

In practice, it seems probable that no useful development tool can really enjoy all of the advantages of a pure functional approach, at least not if it intends to be useful

for systems programming. If a function call results in I/O, for instance, or if it makes some hardware resource unavailable or unreliable for interrupting code, or even if code accesses

a named PIC register, then this code has a side effect, in a very real way, despite the fact that a superficially functional approach may have been followed.

Such unavoidable side effects, and the associated concurrency issues,

are sometimes raised

as potential criticisms of the functional approach.

But the fact that a practical functional language still has some, unavoidable side effects that must be managed does not really eliminate the usefulness of the functional approach.

There are inherent concurrency issues, but under FP they are well-bounded. The application designer can use the PIC datasheet as a checklist for potential

inherent concurrency issues (access to the EUSART must be arbitrated, access to each ADC channel must be arbitrated, and so on). While perhaps not completely idiot-proof,

the functional approach is much preferable to the more open-ended set of potential concurrency issues present under many other paradigms.

In addition to these inherently static resources, the "user" code provided in "multibot.asm" does make use of static allocation in another, very-limited way.

Top-level statics are used for communication between the main task and event handler(s) (ticked, setf, and setg). For these static locations,

the old notion of "race conditions" does exist, and some fairly extensive discussion of how these concurrency issues were addressed in given in subsequent sections of this article.

In general, it is advised that static storage locations in HLOE "user" application be limited to locations necessary for event handler / main task communication. Beyond that,

HLOE "user" code must manage the inherent concurrency issues associated with PIC I/O and other peripheral operation, and, relatedly, with the issue of concurrent access to named

PIC registers, but static storage should not be used for utility purposes or for algorithm implementation. Rather, the parameterized function call mechanism and the main stack should be used

to allocate storage dynamically, in the form of actual parameters and automatic variables. By following these guidelines, which are encouraged by the HLOE kernel and its conventions,

the concurrency issues associated with writing HLOE "user" code can be kept well-bounded, while still allowing such development

to take place at a reasonably high level of abstraction.

Concurrency

Most basically, the presence of multiple processors in the circuit designs described here allows for concurrent execution of two main processes. Each of these main processes (which begin

at location hlluserprog) runs constantly, subject only to the action of interrupts. Each main process runs a single PID process in an infinite loop, and in particular runs the actual

calculations associated with it, as opposed to the I/O. Interrupts occur at the times that are appropriate according to the "Scrapnet" protocol, but,

importantly, the main, PID task never waits for any purpose.

On each CPU, the main task sets setf and setg, which provide the event handler I/O code with information to construct the relevant GUI. The usage of these variables

conforms to the HLOE "user" code specification given above. Normally, static allocation at the "user" level is forbidden. It is allowed, though, for purposes

of communication between the main task and the interrupt handler, with the proviso that such static allocation introduces the possibility of concurrency errors above and beyond those

otherwise presented by HLOE "user" code. In this case, safety with respect to concurrent access in ensured by the fact that this communication flows in a single direction only.

Variables setf and setg are assigned to by the main task only, and accessed by the interrupt service routine (ISR) on a read-only, informational basis only.

This is an easy solution to this problem, and is also an example of the necessary developer thought process, in those concurrency-related situations where the guarantees

of the stack-based architecture must be momentarily abandoned.

The variable ticked exhibits similar concurrency issues, but the timing issues associated with this static memory location are much more complex. This problem is taken up again,

and resolved in detail, in a forthcoming section of this article.

Within each CPU, the concurrency system described here allows for a single, preemptible task, along with a full-featured and robust system of event (interrupt) handlers.

It is possible, on even the most rudimentary PIC processors, to wire up a variety of change events, timers, receive events, and such, and the HLOE infrastructure ensures that

these are fired properly (and, of course, with constant latency) at runtime.

Resource Management

In addition to the potential concurrency issues introduced by each static variable, the developer of HLOE "user" code must deal with the inherently static and shared nature

of PIC resources like the PIC UART, ADC, and so on. Very often, these concurrency issues can be handled in simple fashion by assigning clearly delineated functions to the main task and

to the interrupt service routine.

If these inherent issues are effectively managed, then the kernel and the runtime infrastructure have been carefully designed to abstract over all other concurrency-related details.

The kernel functions and the calling / swapping infrastructure make use of static memory locations only in a limited, well-considered, and reentrant manner. In particular, an organized

system of static allocation is used by the kernel and the runtime infrastructure, to allow for the inclusion of traditional PIC assembly language code, with its heavy reliance on static

allocation, into the HLOE kernel.

There are advantages to this architectural dichotomy between stack-based "user" code and the static-based HLOE kernel. Each of these two types of code has its strengths

and weaknesses, and these tend to complement each other. HLOE "user" code is compact, for example, consisting of function calls and main stack operations.

As seen, it is also concurrency-friendly in several key respects, and offers many high-level structures designed for programmer productivity. As such, "user" code

is particularly well-suited to the development of application firmware.

HLOE "user" code is also comparatively slow, though. Much time is spent managing the second stack, cleaning up automatic variables, and so on. Operations are performed

at the function call level, not the opcode level. For this sort of code to perform well, the kernel functions into which it is calling must be as fast and thrifty as possible.

The use of parm to access "user" function parameters provides an example of these generalizations. While relatively compact, and completely safe with respect

to concurrency, calls to parm are also significantly slower than the instructions necessary to access a static memory location, as one might see in "user" code.

At the same time, the implementation of parm, which is an example of "kernel" code, necessarily takes special steps in order to perform well. This implementation

makes compromises in the area of simplicity and legibility. It does not rely on calls to a function to access temporarily important values. Rather, it stores these directly in static memory

locations, and must do so carefully, with full consideration given to the possibility that an interrupt might, at any time, result in potentially destructive calls into the same

"kernel" function.

Like the stack-based dynamic allocation scheme employed by HLOE "user" code, the systems of static allocation used by the "kernel" code are designed to eliminate

any possibility of concurrency-related issues. Furthermore, the kernel's static allocation architecture contains features designed to facilitate the sharing of static memory locations

between multiple functions, using different names.

Kernel Memory Management

This system of sharing relies on the creation of a series of function families, each of which shares a single set of static memory locations. Functions within a family must not call

other functions in this same family, which would end up reusing the same static locations. Management of these issues is the responsibility of the developer of HLOE "kernel" code;

the benefits of doing so are efficiency and correctness.

One such family includes the graphy and graphx "kernel" functions. It has the name

aart. The two members of this function family make

use of the static declarations shown below:

ansiadt udata

aart00 RES .1

aart01 RES .1

aart02 RES .1

The names aart00, aart01, and aart02, of course, are not ideal for actual development. So, before each function that participates in one

of these static allocation families, one will see a variable definition section based on the

#define directive. The beginning of the graphy function,

including the variable definition section, is shown below:

ansiaff CODE

#define flgg3 aart00

#define vert aart01

#define cont aart02

graphy:

movf HLFSR,w

FAR_CALL graphy, kpush

This system of statics, as mentioned, relies on the fact that, for example, graphx does not call into graphy, even indirectly through a third function.

This allows each function instance in the family to have unfettered access to a shared static data store, from call to return, while still allowing for this data store to be

efficiently shared with other code.

Of course, at any point in time a HLOE "kernel" function can be interrupted by the interrupt handler, and the interrupt handler will potentially make calls into the function family.

This situation is handled by ensuring that function call instances that run during the execution of the interrupt handler use their own set of static memory locations.

Such second sets of memory locations, though, are only used for function families called by both the main task and the interrupt service routine. Function families that do not get

called from both of these portions of the code do not require such protection.

The most basic HLOE functions, including single-byte operations like mul, divu, and setbit, use the group of statics shown below this paragraph.

These locations are termed the BLSS, for "Bottom-Level Static Storage". The BLSS family of functions represents the core HLOE library, and its members are called extensively

by both the main task and the ISR. In writing other "kernel" functions, the availability of the BLSS functions is assumed.

ukernl udata

hllblss00 res 1

#ifdef HLLMULTITASK

hllblss00isr res 1

#endif

hllblss01 res 1

#ifdef HLLMULTITASK

hllblss01isr res 1

#endif

hllblss02 res 1

#ifdef HLLMULTITASK

hllblss02isr res 1

#endif

In concurrent applications like the one described here, the other kernel functions are free to assume that the BLSS can be safely called from both the ISR or the main task,

subject only to inherent hardware limitations. This is evident in the last set of declarations shown. Consider what happens, for example, when HLLMULTITASK is defined,

as it is here, indicating that interrupts are in use. In this case, not just three static locations (hllblss00, hllblss01, and hllblss02),

but six, are allocated. The three main task locations just listed are augmented by ISR-specific locations named hllblss00isr, hllblss01isr,

and hllblss02isr. Each of these resides one byte after its main task analog, and this layout is relied on in the implementation of the functions that use the BLSS data store.

Specifically, these functions operate on one set of static locations if called from the main task, and the other if called from the ISR, ensuring that the promised level of safety

is provided by the BLSS functions.

One example BLSS function implementation is shown below. This is function clearbit. In addition to the

#define directives associated with shared statics,

some key decision logic, based around variable in_isr, is evident:

#define margp2 hllblss00

clearbit:

#ifdef HLLMULTITASK

movf in_isr,f

btfsc STATUS,Z

goto clearbit0

return

#undefine margp2

#define margp2 hllblss00+1

clearbit0:

#endif

return

#undefine margp2

Above, note that two copies of the actual function body exist in parallel. These bodies are replaced by three-line comments in the fragment shown above. These body sections are identical

to each other, except that label names must be different. This repetition is one of those opportunities for abstraction to be dealt with in a future article.

In any case, the way in which #define is used in the code shown above ensures that the ISR reads and writes static location hllblss00+1 while the main task uses

hllblss00. In both cases, the clearbit code refers to this location as margp2, which was a name judged meaningful (at least, more so than

hllblss00) during the development of clearbit.

Concurrent I/O

The concurrency burden placed on "user" code by the "serial" I/O kernel routines generally follows the strategy, already outlined, of abstracting over all

concurrency-related issues in as transparent a fashion as possible given physical hardware limits. "User" code must consider how serial output originating from the event

handler(s) will interact with serial output from the main task, if both of these portions of the high-level code do emit output. However, the kernel functions relating to serial I/O

are preemptible, reentrant, and do guarantee that each character output by the high-level code will be emitted on the bus. Interference in constructing character strings evident in the UI,

e.g. ANSI positioning commands, must therefore be considered by the "user" code developer.

The PID Algorithm

The main task consists of an infinite loop beginning at label longf. In the discussion below, this loop is referred to as the "main" loop. Each iteration

of this loop results in the calculation of a single command value. Expressed mathematically, this command value u is calculated as shown below:

This construction is best explained as the sum of three terms: the first term is a product of KP, a constant, and another quantity, the second a product

of KI, another constant, and another quantity, and the third a product of a third constant, KD and some other quantity.

The quantity by which KP is multiplied is e(t), the error, i.e. the distance between the user-commanded position or setpoint and the actual position,

at the present time t. This term is probably the most obvious of the three; it makes sense that the command, u, should vary in direct proportion to the error.

This first term by itself is used to construct the command u in the most basic "proportional" controllers. In such a controller, an error of 1.0 might translate

into a command of 4.0, an error of 2.0 into a command of 8.0, an error of 3.0 into a command of 12.0, and so on. Or, in a controller wired and scaled differently, an error of 1.0 might

result in a command of -1.0, an error of 2.0 in a command of -2.0, and so on. In the former example, KP would be equal to 4.0; the command equals 4.0 times the error.

In the latter example, KP would equal -1.0. In all such controllers some such value KP exists, and it remains constant during normal

operation (as opposed to setup).

The second term consists of KI multiplied by an integral expression. The integral expression represents the sum of all net error observed in the system from

time 0 (in practice, the time when the "Go" button was pushed) to present. At first glance it might seem that adding up the error from time 0 to present throughout the entire

operation period of the controller would quickly result in a very large sum. In practice, this sum is minimized by the fact that the errors being added up can have either positive

or negative sign. Over time, the error present in a well-operating system therefore tends to cancel itself out, and the second, integral term of the PID equation tends toward zero.

If this does not happen, for example if actual position is persistently less than the commanded setpoint over some period of time, then the action of the second, integral term will tend

to command position higher, to a degree that increases over time.

The action of the integral term can even overwhelm the action of the other two terms if necessary; a highly negative second term may be of greater magnitude than a positive first term,

resulting in a net negative command despite the natural command direction indicated by a purely proportional calculation. This is equivalent to the situation in which the helmsman

of a ship notices that the vessel is exhibiting a tendency to rotate counter-clockwise due to a stiff wind blowing on a tall aft superstructure. In such a situation, he will position

the rudder right of center, to encourage clockwise rotation. If, due to a lull in the wind, or perhaps to manual overshoot during a heading change, he finds that the vessel

actually needs to rotate counter-clockwise, the helmsman will move the rudder correspondingly back toward the left. But he may never actually move the rudder to the point

where it is pointing left-of-center, because he knows that the vessel will naturally rotate in the necessary direction without doing so.

This integral term thus has a sort of memory, which serves to fight against any bias imparted into the system by its environment. In a vehicle heading control system, this bias could

be due to a stiff breeze or current, or even an asymmetrical vehicle design. Whatever the case may be, the action of the second term of the PID equation serves to automatically correct

against the bias present in the control system.

The final, differential term consists of constant KD multiplied by a derivative. In discrete terms, this derivative represents the change in the error term

observed in each iteration of the main loop compared to the prior iteration. The differential term, as typically configured, fights against sudden position movements by imparting

into the overall command a slight opposite action. This term is often described as having a "damping" action; it imparts a certain stickiness or hesitancy into the otherwise